Ocado Group outlines future-facing Responsible AI Framework

AI and data are in our DNA at Ocado Group, helping us to improve decision making, enhance operations and deliver a leading customer proposition.

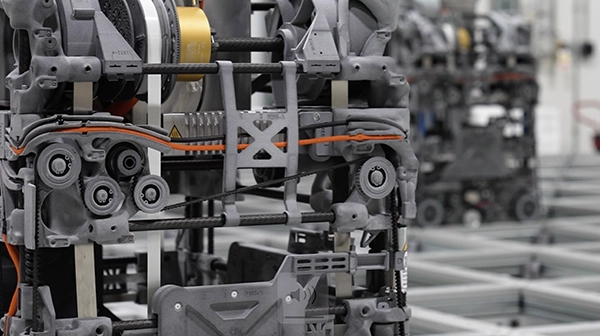

We have over 100 different AI use cases across our business, from intelligent grocery forecasting models that predict demand to deep reinforcement learning that automates the picking and packing process.

Embedding care into the AI models we build and deploy is essential to creating safe and trustworthy systems.

AI Responsibility: The Why

AI requires a different rule book than traditional software because it can be non-deterministic. This means the same input can produce different outputs, and as AI systems continue to learn over time, the range of possible outcomes grows.

Some AI systems are even referred to as ‘black boxes’ because it can be virtually impossible to trace the specific reasoning behind the predictions or decisions they generate.

This is why it’s crucial to design and deploy AI systems thoughtfully, considering fairness, accountability, transparency and explicability throughout the AI lifecycle. Doing so helps ensure systems function as intended and avoid costly mistakes; in that sense, responsible AI is simply successful AI.

And with policymakers and regulators starting to examine more closely how AI systems work and how they affect society, it’s even more important for businesses to make ‘AI responsibility’ a core practice.

Ocado's AI Commitments

Ocado Group has always been a disruptor, reinventing how people shop online through technology and reimagining grocery and logistics value chains. We have extensive experience testing and deploying AI solutions, and part of that has been thinking through how AI can be implemented in a trustworthy way.

More than ever, though, the debate about ethics, responsibility and AI has entered the wider public conversation.

Consumers want to know that AI is governed by checks and balances and uses their data in an appropriate way. Our partners want reassurance that ‘smart’ solutions have been built robustly and will deliver the right impact. And regulators want to ensure businesses are managing risks and being transparent, without holding back growth and competition.

This debate is moving quickly, so we have published our commitments on responsible AI and robotics to make our approach clear, and we continue to embed them within our organisation.

Our five commitments to responsible AI and robotics govern our teams, projects and processes. They cover:

- Fairness: Using high-quality, representative datasets, and mitigating unfair bias.

- Transparency and Explicability: Ensuring systems are well documented so that we can demonstrate their reliability and trace issues back to their source, as well as providing users with an easily understandable explanation of our systems.

- Governance: Ensuring appropriate accountability structures are in place before internal or third-party systems are deployed. Regularly monitoring systems to check performance.

- Robustness and Safety: Integrating privacy and security into design, assessing safety considerations, and building in appropriate safeguards.

- Impact: Ensuring interactions with people are conducted with respect and empathy. Considering the impact of automation on affected staff, communicating in an upfront way and providing opportunities for re-skilling where possible.

Embedding AI Responsibility across Ocado Group

We know that AI tools and capabilities are evolving quickly, so our approach to responsibility needs to be agile in its implementation, as well as founded on solid principles.

Our approach to embedding responsible AI is flexible: it requires each of our teams to identify practical and proportionate actions relevant to their projects, while allowing them to innovate at pace.

There are some key lessons we have learned from this process which hold true for any organisation looking to make AI responsibility a practical reality.

1. Start from square one - Does everyone understand what you are trying to achieve, and (more importantly) why?

Over the course of four months we held an ‘education roadshow’ for almost 4,000 employees to generate awareness and start an open conversation around responsible uses of AI.

2. This is a live conversation: make sure you have an open forum where people can raise challenges or questions in real time

We established an AI Responsibility forum to support those building or using AI tools. The forum gives developers the opportunity to engage with experts from other disciplines (e.g. risk, data governance or intellectual property) and resolve any questions they may have about responsible practice.

3. Have a bird’s eye view of your AI operations

This feels like an obvious one, but AI tools and uses are proliferating fast. Making sure you have a clear and comprehensive single view of where you are using AI (whether you are developing your own tools or using third-party solutions) is an important early step. We have a long-standing AI registry into which employees submit new or existing projects. As well as helping us to map our risk and ensure good governance, it’s also key to identifying areas where we could deploy new AI capabilities in the future.

Future focuses

We’re continuing to embrace the advantages AI provides, both in enhancing the customer experience and in unearthing new levels of efficiency for our business. Today, our core focuses include:

Innovating around Generative AI

Fast-paced teams (like ours) are always innovating. To nurture this creativity responsibly we keep a finger on the pulse of the latest generative AI tools, conducting thorough tests in-house before rolling out new applications.

Making AI accessible and understandable

Building trust and understanding of how AI works is crucial to securing confident adoption. This is why we’re focusing on drafting solid technical documentation and user explanations for our AI use cases.

Future-proofing our solutions

We know that companies like ours, which have staff and operations in the EU, will fall within the scope of the recently adopted EU AI Act. This is why we’ve been anticipating and preparing for compliance: designing robust structures and baking requirements and practices into AI projects well ahead of expected deadlines.

The acceleration of AI ushers in a new era of responsibility for developers and users alike. Having open conversations about AI's purpose, applications, risks and opportunities will be an important part of corporate culture in the coming years and we’re confident that our AI Responsibility framework is a strong foundation to build on.
